Apple Intelligence Feature Faces Calls for a Ban After False Headline Attributed to the BBC

There was a stir in the media this week as Reporters Sans Frontières (RSF) called for a temporary suspension of Apple Intelligence following a serious blunder that showed how damaging AI-generated news digests can be. The upset began when the feature falsely claimed that Luigi Mangione, a suspect in a sensitive case, had committed suicide.

The incident has prompted wide discussion about how information is delivered to the public and about the effect of Apple Intelligence on journalism. The BBC, one of the world’s leading news providers, was on the receiving end of this technological blunder when the AI system generated and pushed out an unverified claim about an ongoing investigation.

Well, That’s Awkward: When AI Tries to Play Journalist and Gets It Completely Wrong

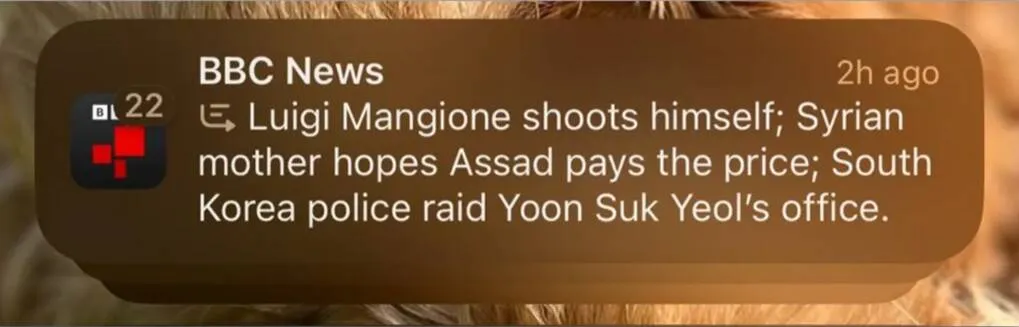

The severity of the issue became clear when Apple Intelligence generated a notification that read “Luigi Mangione shoots himself”, a false headline that was automatically attributed to BBC News. The error has raised questions about whether automatically generated AI summaries of this kind mislead readers, and whether they ultimately damage public trust in journalism.

The BBC’s response was swift and decisive. A spokesperson emphasized the organization’s commitment to accuracy, saying that BBC News is among the most popular and trusted sources of world news, and that it matters to both the broadcaster and its audiences that they can rely on any information or journalism published under its name, including notifications. The broadcaster then contacted Apple in a bid to rectify the situation, but the tech firm has not responded.

Here’s Where Things Get Serious: Global Journalism Watchdog Steps Into the Ring

RSF’s intervention has clearly escalated the debate over Apple Intelligence. While the organisation has previously spoken about how the technology could be used to support democratic systems in the future, it has strongly criticised its current use.

Vincent Berthier, RSF’s technology lead, didn’t mince words when addressing the situation: “AIs are probability machines and facts cannot be decided by a roll of a dice.” His statement highlights the tension between probabilistic AI systems such as Apple Intelligence and the absolute necessity of precise reporting in news.

Let’s Talk About the Elephant in the Room: Can We Really Trust AI with Our News?

This is not just about one false alert; the consequences reach much further. The incident has drawn public attention to the responsibility that Apple Intelligence and other AI systems bear in shaping how the public consumes information. In its statement, RSF noted that “generative AI services are still too raw to provide reliable information to the public” and should not be allowed for such applications.

This event is a good example of the challenges Apple Intelligence and similar technologies still face in becoming both convenient and useful while upholding journalistic standards. The false report about Mangione shows that even advanced AI systems can go wrong and make mistakes that a human journalist would be unlikely to make.

So, What’s Next? The Million-Dollar Question Everyone’s Asking

As the controversy continues, the fate of Apple Intelligence remains uncertain. Apple’s silence has only intensified scrutiny of the feature, with many industry commentators demanding more information on how the system generates and checks the information that goes out to users.

This raises important questions about Apple Intelligence and similar AI news summarization tools. Are these systems capable of generating news summaries on their own, or should they be supervised by a human? What measures must be taken to ensure that an error of this gravity does not recur? These are questions that technology companies, news organisations and regulatory bodies will have to answer as artificial intelligence assumes a more important role in news distribution.

Final Thought

The controversy is an important reminder that using AI to improve how we read the news and follow events is useful only if done with an understanding of its limitations and consequences. As the technology advances, the key issue will be how to balance innovation with reliability in the tools that supply the news, so that public trust in both news sources and AI platforms is maintained.

This case could also mark a turning point in how AI is used in news dissemination, and lead to stronger controls on Apple Intelligence and its counterparts.