Microsoft Recall’s Privacy Promises Fall Short in Security Test

It’s a worrying development for privacy advocates and for Windows users who may be counting on some much-needed extra security: Microsoft Recall’s promised security features are falling short of expectations. Microsoft had pulled the highly controversial feature and promised that its return would include protections for people’s privacy, yet recent testing shows that it still captures personal information such as credit card numbers and Social Security numbers.

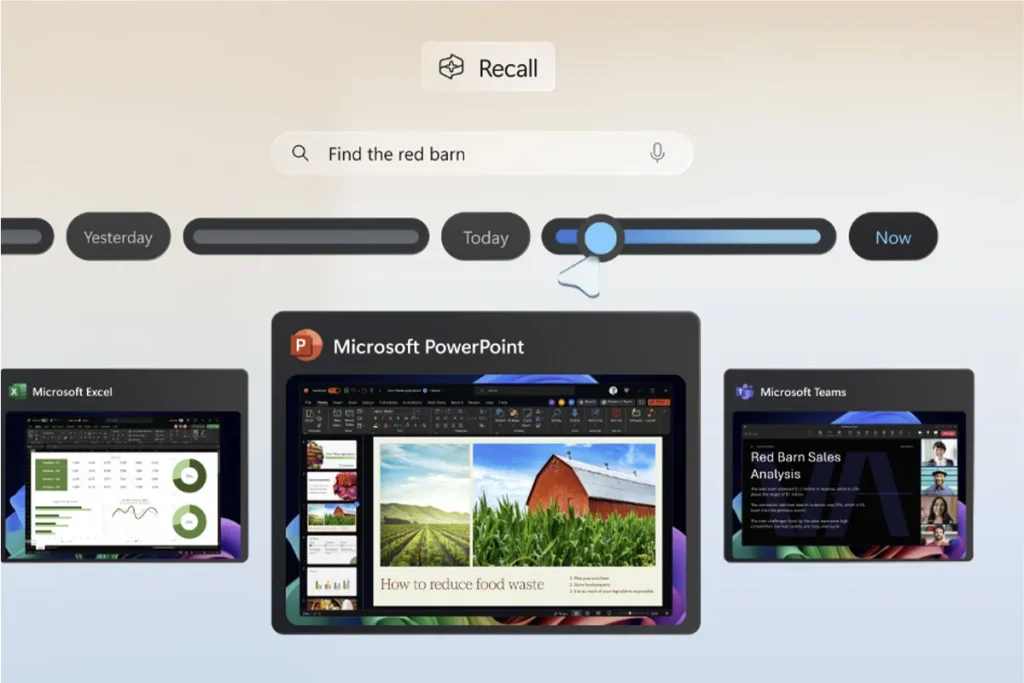

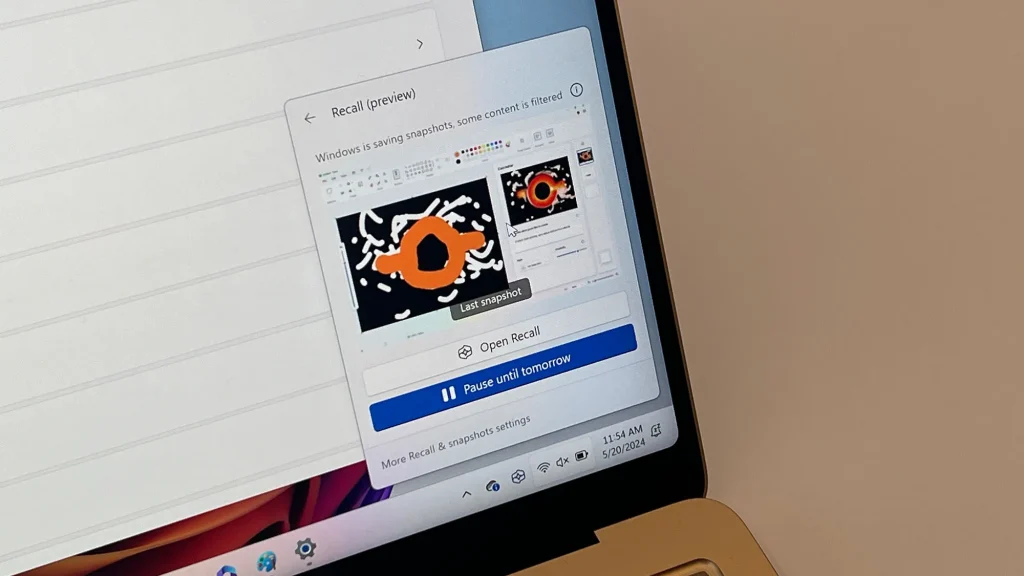

When Microsoft brought Recall back to Windows Insider builds after removing it in June amid privacy concerns, the relaunched feature was meant to meet stronger security standards. The company added what it calls a sensitive information filter, intended to keep personal financial and identification data from being captured.

Under the Hood: What’s Really Being Captured?

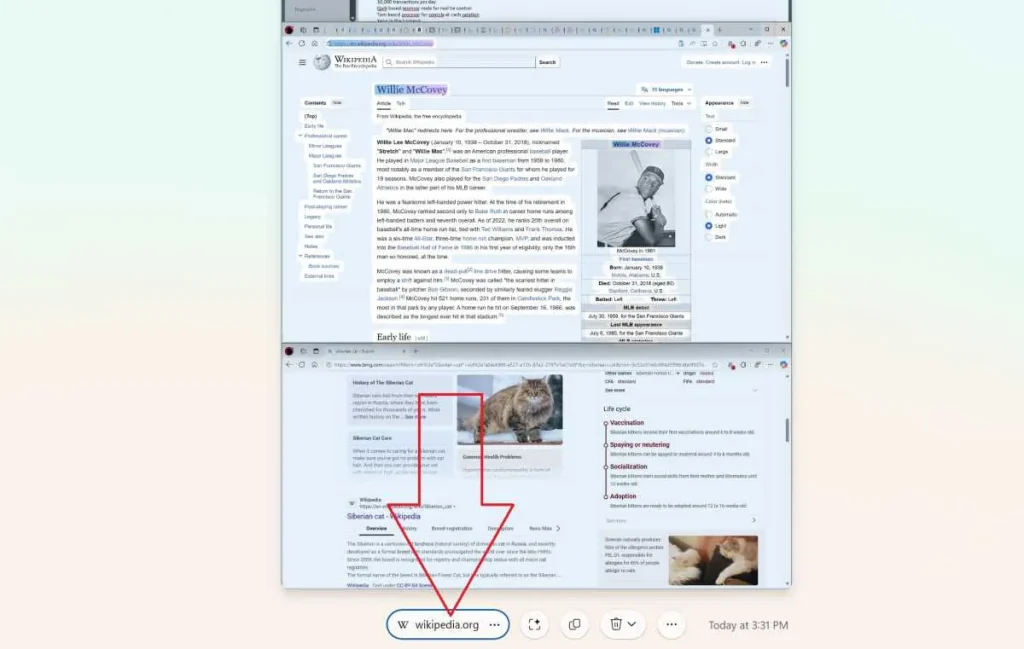

However, tests by Tom’s Hardware have found significant gaps in Recall’s privacy protections. The tests showed that the feature captured entire credit card numbers and Social Security information, even with the sensitive information filter enabled. The finding runs so directly counter to what Microsoft told the public about the feature’s privacy protections that the two are hard to reconcile.

What makes this especially troubling is that the failures are not confined to obscure edge cases; they occur across a variety of ordinary scenarios. While the feature skips some web pages involving sensitive data, that protection does not hold in every instance.

The Technical Reality Behind the Promises

The revamped version of Recall was supposed to be a more secure alternative to the original. Microsoft encrypts the captured screenshots and added biometric login requirements as an additional layer of security. Nevertheless, these improvements do not adequately prevent the capture of sensitive personal information.

The core problem is that Recall struggles to determine what counts as sensitive content. Despite the company’s sophisticated AI and on-device processing, the feature’s ability to recognize and omit sensitive information is unreliable at best and ineffective at worst.

Impact on User Privacy and Security

These findings raise significant data security and user privacy concerns. The feature is currently available only to Windows Insiders, but if Microsoft extends it to a much wider audience, it could expose many more people to privacy risks they may not even be aware of. This is particularly serious for people who routinely handle sensitive information on their computers.

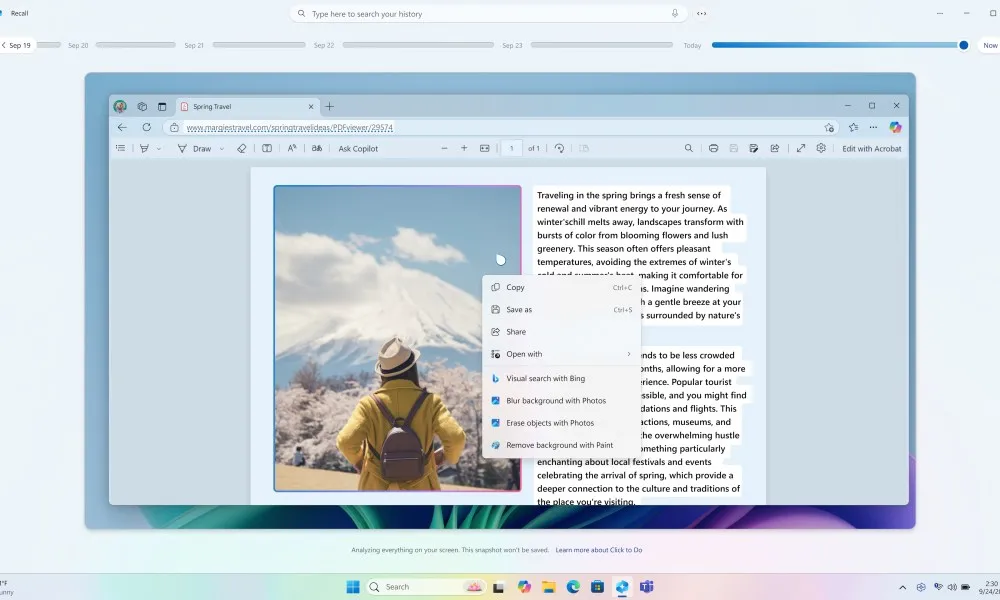

If Recall can capture such sensitive data with its privacy filter turned on, that raises broader questions about the tradeoff between convenience and security in this kind of AI-assisted computing. Users who enable the feature for its potential productivity benefits could unknowingly be building a repository of sensitive personal information.

Microsoft’s Response and Future Implications

It is uncertain how long Recall will stay around as news of these privacy concerns spreads. When security concerns arose in June, the company showed it was willing to pull the feature. If Microsoft cannot adequately address this latest discovery, it may have to rethink the feature’s implementation a third time.

Microsoft Recall illustrates how difficult it is to deliver AI-powered features while maintaining rigorous privacy protections. It also underscores the need to test and validate privacy features thoroughly before they reach end users.

The Path Forward

Recall’s struggles are part of a wider industry tension between new features and privacy protection, and they highlight how difficult that balance is to strike. As with any operating system, the more deeply AI is integrated into Windows, the more essential foolproof privacy safeguards become.

For now, Windows Insiders testing Recall should use caution, particularly when viewing screens that contain sensitive data. The situation is also a reminder for users to check their privacy settings periodically and to be mindful of what information they allow system features to capture.

As this story develops, all eyes are on Microsoft and the steps it takes to address these serious privacy concerns. The company’s response could set important precedents for how tech firms handle privacy and security in AI features in the future.