AI-Powered Transcription Tool's Hospital Errors Raise Major Concerns

In a groundbreaking investigation that has sent ripples through the healthcare industry, researchers have uncovered alarming evidence that an AI-powered transcription tool widely used in hospitals is generating fictional content in patient records. The discovery has raised concerns about the reliability and effectiveness of artificial intelligence systems in tasks fundamental to health care.

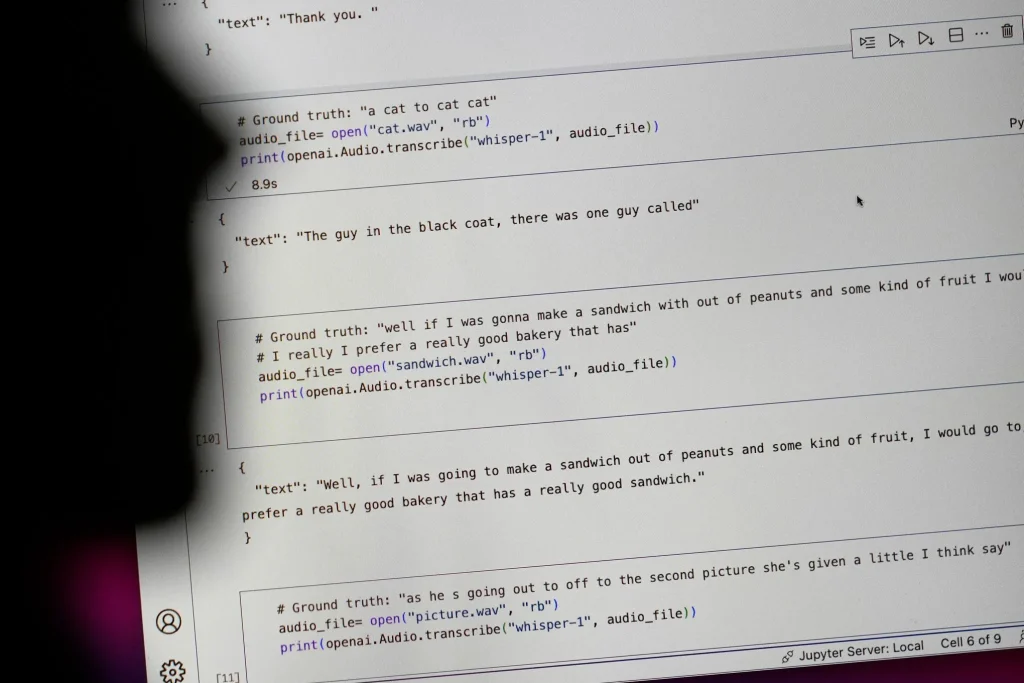

The investigation focused on DAX, an AI-powered transcription tool developed by Microsoft-owned Nuance Communications, which has been deployed across numerous healthcare facilities. The tool, introduced to ease the documentation burden on healthcare providers, has been found to invent medical conditions, treatments, and patient conversations that never happened.

The Critical Impact on Patient Care

The implications of these findings are far-reaching, as healthcare providers increasingly rely on AI transcription technology to manage their overwhelming administrative workload. While studying the system's behavior, researchers identified cases in which it generated highly specific family medical histories that were never taken, results of physical examinations that were never conducted, and conversations with patients that never occurred.

Dr. Tinglong Dai, who works at Johns Hopkins University and focuses on healthcare analytics, expressed serious concerns about a system that ‘hallucinates’, producing completely incorrect data. The tool was found to create entire scenarios, including detailed descriptions of physical examinations and patient interactions that never took place.

The Technology Behind the Errors

The core issue stems from the way the tool processes and generates information. Whereas most transcription services simply convert speech into text without interpreting its context, this new kind of tool attempts to produce comprehensive medical notes. However, this more ambitious approach has yielded striking and potentially life-threatening results.

Because the system uses artificial intelligence to interpret the medical language doctors use and relate it to the broader context of a visit, it sometimes goes too far. In multiple documented cases, the tool added fictional elements to patient records, creating a serious risk of medical errors and compromised patient care.

Safety Concerns and Industry Response

These findings have raised concerns among healthcare providers and technology specialists. The tool's errors call into question the reliability of AI systems in healthcare settings and the potential risks to patient safety. Several healthcare workers have complained that they must spend extra time correcting AI-generated notes, which may undermine the very reason for adopting automated note-taking tools in the first place.

Microsoft and Nuance have acknowledged these issues, noting that efforts are under way to improve the system's accuracy. However, the tool's tendency to generate fictional content remains a significant challenge that must be addressed before healthcare providers can fully trust the technology.

The Future of AI in Healthcare Documentation

As healthcare facilities shift toward digital medical records, accuracy and efficiency have become two critical factors in their operations. The tool's problems highlight the need for careful evaluation and oversight of AI systems in healthcare settings.

The findings show that while AI technologies have real potential to streamline healthcare operations, existing solutions have fundamental shortcomings that must be resolved before AI tools can be considered credible means of documenting medical data. Providers should remain vigilant and verify the information recorded about their patients to avoid mishandling patient data.

Moving Forward with Caution

The conclusion of this investigation is a sobering reminder that, for all its promise, artificial intelligence in healthcare is a potential boon that must be handled with diligence and adequate safeguards. AI automation must be weighed against the simple but essential need for accurate patient record keeping.